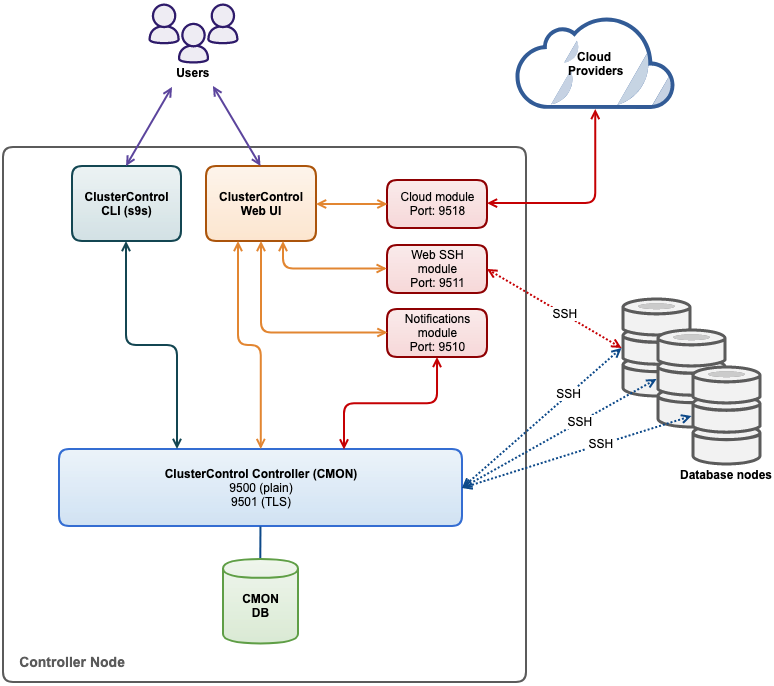

ClusterControl consists of a number of components:

| Component | Package Name | Role |
|---|---|---|
| ClusterControl Controller (cmon) | clustercontrol-controller | The brain of ClusterControl. A backend service that performs automation, management, monitoring and scheduling tasks. All collected data is stored directly in the CMON database. |
| ClusterControl GUI | clustercontrol2 | A modern web user interface to visualize and manage the cluster. It interacts with the CMON controller via remote procedure calls (RPC). |
| ClusterControl SSH | clustercontrol-ssh | An optional package introduced in ClusterControl 1.4.2 for ClusterControl’s web-based SSH console. Only works with Apache 2.4+. |
| ClusterControl Notifications | clustercontrol-notifications | An optional package introduced in ClusterControl 1.4.2 providing a service and interface for integration with third-party messaging and communication tools. |
| ClusterControl Cloud | clustercontrol-cloud | An optional package introduced in ClusterControl 1.5.0 providing a service and interface for integration with cloud providers. |
| ClusterControl Cloud File Manager | clustercontrol-clud | An optional package introduced in ClusterControl 1.5.0 providing a command-line interface to interact with cloud storage objects. |
| ClusterControl Multi-Controller | clustercontrol-mcc | An optional package introduced in ClusterControl 2.0.0 providing centralized management of multiple ClusterControl Controller instances, also known as ClusterControl Operations Center. |
| ClusterControl Proxy | clustercontrol-proxy | An optional package introduced in ClusterControl 2.0.0 providing a controller proxying service for ClusterControl Operations Center. |
| ClusterControl CLI | s9s-tools | An open-source command-line tool to manage and monitor clusters provisioned by ClusterControl. |

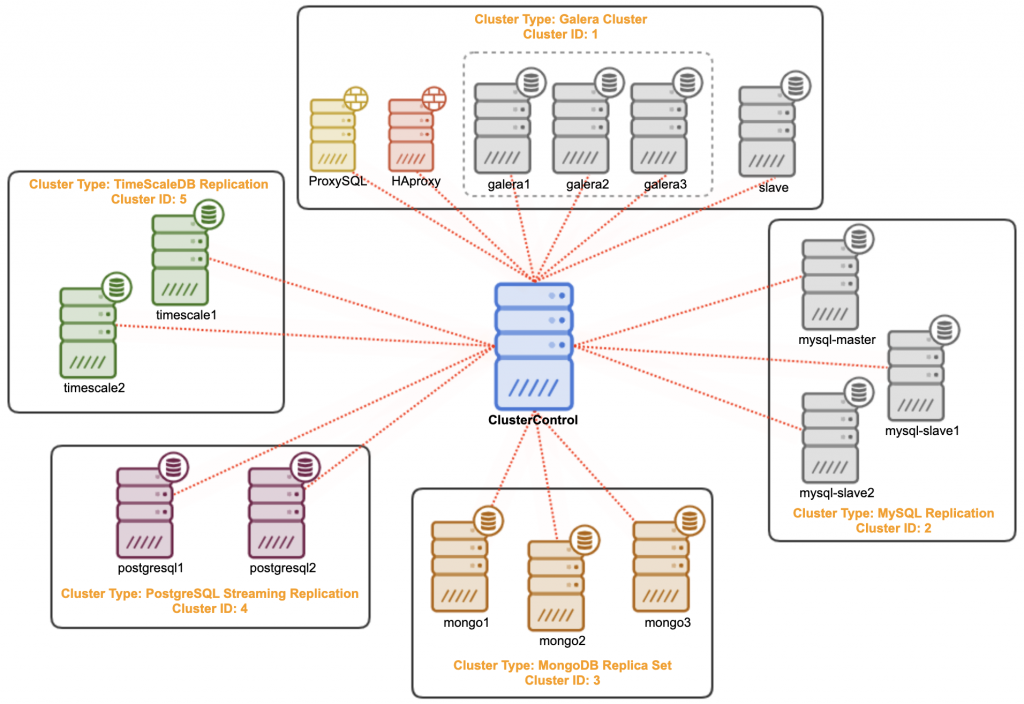

ClusterControl components must reside on an independent node, separate from your database cluster. For example, if you have a three-node Galera cluster, ClusterControl should be installed on a fourth node. The following is an example deployment of a Galera cluster with ClusterControl:

Once the cmon service is started, it loads all configuration options from /etc/cmon.cnf and /etc/cmon.d/cmon_*.cnf (if they exist) into the CMON database. Each CMON configuration file represents a cluster with a distinct cluster ID. The service starts by registering hosts and collecting information, then periodically performs check-ups and scheduled jobs on all managed nodes through SSH. Setting up passwordless SSH is vital in ClusterControl for agentless management purposes. For monitoring, ClusterControl can be configured with either an agentless or an agent-based setup; see Monitoring Operations for details.
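To make the one-file-per-cluster layout concrete, here is a minimal Python sketch that lists the configuration files named above and maps each one to its cluster ID. It is not how cmon itself loads its configuration. It assumes the files use plain `key=value` lines with `#` comments and that the cluster ID is stored under a `cluster_id` key; both are assumptions made for illustration, and the function names are hypothetical.

```python
import glob
import os


def parse_cmon_config(text):
    """Parse key=value lines, skipping blank lines and '#' comments.

    The key=value format is an assumption made for this sketch.
    """
    options = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Drop surrounding whitespace and optional quotes around the value.
        options[key.strip()] = value.strip().strip("'\"")
    return options


def discover_clusters(main_file="/etc/cmon.cnf", conf_dir="/etc/cmon.d"):
    """Return {cluster_id: path} for each readable file that sets cluster_id.

    'cluster_id' is an assumed option name, used here for illustration.
    """
    pattern = os.path.join(conf_dir, "cmon_*.cnf")
    candidates = [main_file] + sorted(glob.glob(pattern))
    clusters = {}
    for path in candidates:
        try:
            with open(path, encoding="utf-8") as handle:
                options = parse_cmon_config(handle.read())
        except OSError:
            continue  # missing or unreadable file: skip it
        if "cluster_id" in options:
            clusters[options["cluster_id"]] = path
    return clusters
```

Calling `discover_clusters()` on a ClusterControl host would return one entry per configuration file that declares a cluster ID, which mirrors the "one file, one cluster" rule described above.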

ClusterControl connects to all managed nodes as os_user, using the SSH key defined in ssh_identity inside the CMON configuration file. Details are explained in the Passwordless SSH section.
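As a rough illustration of what a key-based, non-interactive connection looks like, the Python sketch below builds the equivalent OpenSSH command line from the two options mentioned above. The host address, user and key path are hypothetical values, and the helper `build_ssh_command` is not part of ClusterControl; the controller's actual SSH handling may differ.

```python
import shlex


def build_ssh_command(host, os_user, ssh_identity, remote_command="true"):
    """Return the argv list for a non-interactive, key-based SSH call."""
    return [
        "ssh",
        "-i", ssh_identity,        # private key, as named by ssh_identity
        "-o", "BatchMode=yes",     # fail instead of prompting for a password
        "-o", "ConnectTimeout=5",  # give up quickly on unreachable hosts
        f"{os_user}@{host}",
        remote_command,
    ]


# Hypothetical values, for illustration only.
argv = build_ssh_command("10.0.0.11", "root", "/root/.ssh/id_rsa")
print(shlex.join(argv))
```

Running the printed command by hand from the ClusterControl node is a quick way to check that passwordless SSH works: with `BatchMode=yes`, the call fails immediately rather than falling back to a password prompt.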

All you need to do is open the ClusterControl UI at https://{ClusterControl_host}/clustercontrol and start managing your database infrastructure from there. You can begin by importing an existing database cluster or by creating a new database server or cluster, on-premises or in the cloud. ClusterControl supports monitoring multiple clusters and cluster types under a single ClusterControl server, as shown in the following figure:

The ClusterControl controller exposes all functionality through remote procedure calls (RPC) on port 9500 (authenticated by an RPC token) and port 9501 (RPC with TLS), and integrates with a number of modules such as notifications (9510), cloud (9518) and web SSH (9511). The client components, ClusterControl UI or ClusterControl CLI, interact with those interfaces to retrieve monitoring data (cluster load, host status, alarms, backup status, etc.) or to send management commands (add/remove nodes, run backups, upgrade a cluster, etc.).
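When troubleshooting connectivity between these components, it can help to check which of the ports are reachable. The Python sketch below does a plain TCP connect test against the port numbers listed above. The dictionary labels are illustrative names rather than official identifiers, and a successful connection only shows that something is listening, not that the service is healthy.

```python
import socket

# Port numbers as listed in the text; the keys are illustrative labels.
CMON_PORTS = {
    "rpc": 9500,
    "rpc_tls": 9501,
    "notifications": 9510,
    "web_ssh": 9511,
    "cloud": 9518,
}


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_services(host, ports=CMON_PORTS, timeout=2.0):
    """Return {label: reachable} for each port in the mapping."""
    return {
        name: port_is_open(host, port, timeout)
        for name, port in ports.items()
    }
```

For example, `check_services("127.0.0.1")` run on the ClusterControl node reports which of the services accept local connections. Some of these services may only be bound to the local interface, so a check from another machine can legitimately report them as closed.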

The following diagram illustrates the architecture of ClusterControl:

ClusterControl has minimal performance impact, especially with an agent-based monitoring setup, and will not cause any downtime to your database server or cluster. In fact, it performs automatic recovery (if enabled) when it detects a failed database node or cluster.