
In ClusterControl v2, only agent-based monitoring via Prometheus is supported; agentless monitoring (monitoring via SSH) is deprecated. If you need the agentless monitoring approach, use ClusterControl v1.

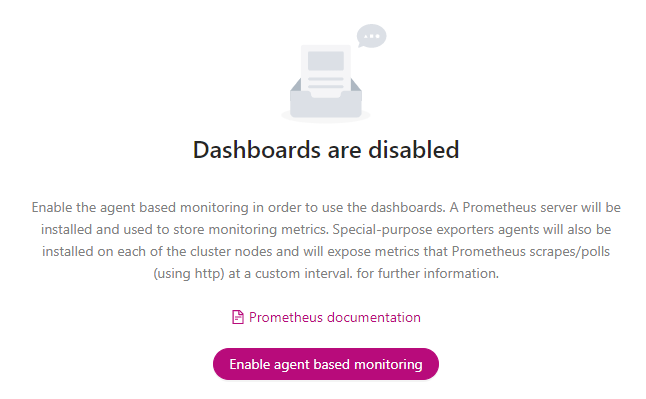

You will be presented with a notification panel to enable agent-based monitoring if it has not been activated, as shown below:

You can also click Enable Monitoring under the Clusters page for the corresponding cluster. This opens the same configuration wizard described in the Enable Agent-Based Monitoring section below.

To understand how ClusterControl performs monitoring jobs, see Monitoring Operations.

Enable Agent-Based Monitoring

Opens a step-by-step deployment wizard to enable agent-based monitoring.

For a new Prometheus deployment, ClusterControl installs the Prometheus server on the target host and configures exporters on all monitored hosts according to their role in that particular cluster. If you choose an existing Prometheus server that was deployed by ClusterControl, ClusterControl connects to that data source and configures the Prometheus exporters accordingly.

Configuration

This section configures the Prometheus monitoring server.

| Field | Description |
|---|---|
| Select Host | |
| Scrape Interval | |
| Data Retention | |
| Data Retention Size | |
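As a rough illustration of what the fields above control, the sketch below maps them onto standard Prometheus settings: `scrape_interval` in the `global` section of the configuration file, and the `--storage.tsdb.retention.time` and `--storage.tsdb.retention.size` server flags. The function name, the default values, and the configuration file path are assumptions made for this example; how ClusterControl actually applies the wizard values is not described here.

```python
def prometheus_settings(scrape_interval="10s", retention="15d",
                        retention_size=None,
                        config_file="/etc/prometheus/prometheus.yml"):
    """Return (global config snippet, server flags) for the given values.

    All defaults and the config_file path are hypothetical examples.
    """
    # Scrape Interval -> global scrape_interval in the Prometheus config file.
    global_section = f"global:\n  scrape_interval: {scrape_interval}\n"

    # Data Retention -> time-based retention flag.
    flags = [
        f"--config.file={config_file}",
        f"--storage.tsdb.retention.time={retention}",
    ]
    # Data Retention Size -> size-based retention flag, added only when set.
    if retention_size:
        flags.append(f"--storage.tsdb.retention.size={retention_size}")
    return global_section, flags


if __name__ == "__main__":
    section, flags = prometheus_settings("10s", "15d", "1GB")
    print(section)
    print(" ".join(flags))
```

Leaving `retention_size` unset omits the size flag, so only time-based retention applies in that case.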

Exporters

An exporter collects metrics from a non-Prometheus system and exposes them in a form that Prometheus can scrape. It acts as the monitoring agent (hence the term “agent-based monitoring”).

In the deployment wizard, the Node exporter and Process exporter will be available for all nodes. The subsequent exporters’ configuration will depend on the cluster type and role. For example, a Percona XtraDB Cluster with ProxySQL will have additional MySQL exporter and ProxySQL exporter sections. For each exporter, you may customize the scrape interval and arguments to be passed when running the agent.

For the list of supported exporters and their configurations, see Supported Exporters.

| Field | Description |
|---|---|
| Scrape Interval | |
| Arguments | |
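The two per-exporter settings can be pictured as follows: the scrape interval becomes a per-job override in a Prometheus `scrape_configs` entry, and the arguments are appended to the exporter's command line. This is a minimal sketch under those assumptions; the helper names, host address, and example argument are hypothetical and do not show how ClusterControl generates its configuration.

```python
import shlex


def exporter_scrape_job(job_name, targets, scrape_interval):
    """Build one Prometheus scrape_configs entry as a plain dict."""
    return {
        "job_name": job_name,
        # Per-job value that overrides the global scrape interval.
        "scrape_interval": scrape_interval,
        "static_configs": [{"targets": list(targets)}],
    }


def exporter_command(binary, arguments):
    """Split an argument string shell-style and prepend the exporter binary."""
    return [binary, *shlex.split(arguments)]


if __name__ == "__main__":
    # Hypothetical host and argument, for illustration only.
    print(exporter_scrape_job("mysqld", ["10.0.0.11:9104"], "30s"))
    print(exporter_command("mysqld_exporter", "--web.listen-address=:9104"))
```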

To understand how ClusterControl installs and configures the Prometheus server and all exporters, see Agent-Based Monitoring.

Supported Exporters

All monitored nodes are to be configured with at least three exporters (depending on the node’s role):

- Process exporter (port 9011)
- Node/system metrics exporter (port 9100)
- Database or application exporters:
  - MySQL/MariaDB exporter (port 9104)
  - MongoDB exporter (port 9216)
  - PostgreSQL/TimescaleDB exporter (port 9187)
  - ProxySQL exporter (port 42004)
  - HAProxy exporter (port 9101)
  - PgBouncer exporter (port 9127)
  - Redis exporter (port 9121)
  - SQL Server for Linux exporter (port 9399)

On every monitored host, ClusterControl configures and daemonizes the exporter process using a program called daemon. The ClusterControl host should therefore have an Internet connection so that it can install the necessary packages and automate the Prometheus deployment. For offline installation, the packages must be pre-downloaded into /var/cache/cmon/packages on the ClusterControl node. For the list of required packages and links, refer to /usr/share/cmon/templates/packages.conf. Apart from the Prometheus scrape process, ClusterControl also connects to the process exporter directly via HTTP to determine the process state of the node. No sampling via SSH is involved in this process.
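To make the direct HTTP check more concrete, here is a minimal sketch of reading an exporter's output and deciding which process groups are running. It assumes the exporter serves the Prometheus text exposition format at `/metrics` (the usual convention) and uses `namedprocess_namegroup_num_procs` as an illustrative metric name; the actual endpoint, metric names, and logic used by ClusterControl are not documented here. The parser is deliberately simplified and does not handle escaped quotes inside label values.

```python
import re
from urllib.request import urlopen

# name, optional {labels}, then the sample value.
_LINE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)')
_LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def fetch_metrics(host, port=9011, timeout=5):
    """Fetch raw metrics text; the /metrics path is an assumption."""
    with urlopen(f"http://{host}:{port}/metrics", timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def parse_metrics(text):
    """Parse exposition text into (name, labels, value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip HELP/TYPE comments
            continue
        match = _LINE.match(line)
        if match:
            name, raw_labels, value = match.groups()
            labels = dict(_LABEL.findall(raw_labels or ""))
            samples.append((name, labels, float(value)))
    return samples


def running_groups(samples, metric="namedprocess_namegroup_num_procs"):
    """Return the group names whose process count is above zero."""
    return {labels.get("groupname") for name, labels, value in samples
            if name == metric and value > 0}


# Illustrative sample text, not captured from a real host.
SAMPLE = """\
# TYPE namedprocess_namegroup_num_procs gauge
namedprocess_namegroup_num_procs{groupname="mysqld"} 1
namedprocess_namegroup_num_procs{groupname="proxysql"} 0
"""

if __name__ == "__main__":
    print(running_groups(parse_metrics(SAMPLE)))
```

Against a live host, `parse_metrics(fetch_metrics("10.0.0.11"))` would replace the sample text, where the address is a placeholder.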

With agent-based monitoring, ClusterControl depends on a working Prometheus for accurate reporting on management and monitoring data. Therefore, Prometheus and exporter processes are managed by the internal process manager thread. A non-working Prometheus will have a significant impact on the CMON process.

Since version 1.7.3, ClusterControl allows multiple instances on a single host (only for PostgreSQL-based clusters). To avoid port conflicts, ClusterControl takes a conservative approach: if there is more than one process of the same kind to monitor on a host, it automatically configures a different exporter port for each instance by incrementing the port number. Suppose you have two ProxySQL instances deployed by ClusterControl and you want to monitor both via Prometheus. ClusterControl configures the first ProxySQL exporter on the default port, 42004, and the second on port 42005 (incremented by 1).
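The port-increment rule can be sketched as below. This is an illustration of the behavior described above, not ClusterControl's implementation: the function name, input shape, and host addresses are hypothetical, and the sketch does not check whether an incremented port collides with another exporter's default port.

```python
from collections import defaultdict


def assign_exporter_ports(instances, default_ports):
    """Assign one exporter port per monitored instance.

    instances: iterable of (host, exporter_kind) pairs, in deployment order.
    default_ports: mapping of exporter_kind -> default port.
    """
    seen = defaultdict(int)  # (host, kind) -> instances already assigned
    assigned = []
    for host, kind in instances:
        # First instance gets the default port, each later one gets +1.
        port = default_ports[kind] + seen[(host, kind)]
        seen[(host, kind)] += 1
        assigned.append((host, kind, port))
    return assigned


if __name__ == "__main__":
    ports = assign_exporter_ports(
        [("10.0.0.21", "proxysql"), ("10.0.0.21", "proxysql")],
        {"proxysql": 42004},
    )
    for entry in ports:
        print(entry)
```

Counting per host and exporter kind means a ProxySQL instance on a different host still starts at the default port.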

The collector flags are configured based on the node’s role, as shown in the following table (some exporters do not use collector flags):

| Exporter | Collector Flags |
|---|---|
| mysqld_exporter | |
| node_exporter | |

Monitoring Dashboards

Dashboards are composed of individual monitoring panels arranged on a grid. ClusterControl pre-configures a number of dashboards depending on the cluster type and host’s role. The following table explains them:

| Cluster/Application type | Dashboard | Description |
|---|---|---|
| All clusters | System Overview | Provides panels of host metrics and usage for an individual host. |
| All clusters | Cluster Overview | Provides selected host and database metrics for all hosts for comparison. |
| MySQL/MariaDB-based clusters | MySQL Server – General | Provides panels of general database metrics and usage for the individual database node. |
| MySQL/MariaDB-based clusters | MySQL Server – Caches | Provides important cache-related metrics for the individual database node. |
| MySQL/MariaDB-based clusters | MySQL InnoDB Metrics | Provides important InnoDB-related metrics for the individual database node. |
| MySQL Replication | MySQL Replication | Provides panels related to replication for the individual database node. |
| Galera Cluster | Galera Overview | Provides cross-server Galera cluster metrics for all database nodes. |
| Galera Cluster | Galera Server Charts | Provides panels related to Galera replication metrics for the individual database node. |
| ProxySQL | ProxySQL Overview | Provides important ProxySQL metrics for individual ProxySQL nodes. |
| HAProxy | HAProxy Overview | Provides important HAProxy metrics for an individual node. |
| PostgreSQL | PostgreSQL Overview | Provides panels of general database metrics and usage for an individual database node. |
| TimescaleDB | TimescaleDB Overview | Provides panels of general database metrics and usage for the individual database node. |
| MongoDB Sharded Cluster | MongoDB Cluster Overview | Provides panels related to all Mongos of the cluster. |
| MongoDB ReplicaSet/Sharded Cluster | MongoDB ReplicaSet | Provides panels related to a replica set for the individual database hosts. |
| MongoDB ReplicaSet/Sharded Cluster | MongoDB Server | Provides panels of general database metrics and usage for the individual database host. |
| Redis | Redis Overview | Provides important Redis metrics for individual Redis nodes. |
| Microsoft SQL Server | MSSQL Overview | Provides panels related to Microsoft SQL Server metrics for individual SQL Server nodes. |

When clicking on the gear icon (top-right), the following functionalities are available:

| Field | Description |
|---|---|
| Exporters | |
| Configuration | |
| Re-enable Agent Based Monitoring | |
| Disable Agent Based Monitoring | |
| Enable Tooltip Sync | |
| Disable Tooltip Sync | |

The monitoring panels’ section provides the following functionalities:

| Field | Description |
|---|---|
| Dashboard | |
| Host | |
| Range Selection | |
| Zoom In Time Range (+) | |
| Zoom Out Time Range (-) | |
| Refresh Every | |

For more information on how ClusterControl performs monitoring jobs, see Monitoring Operations. To learn more about the Prometheus monitoring system and exporters, refer to the Prometheus Documentation.